RDM and FAIRness

# Research Data Management (RDM)

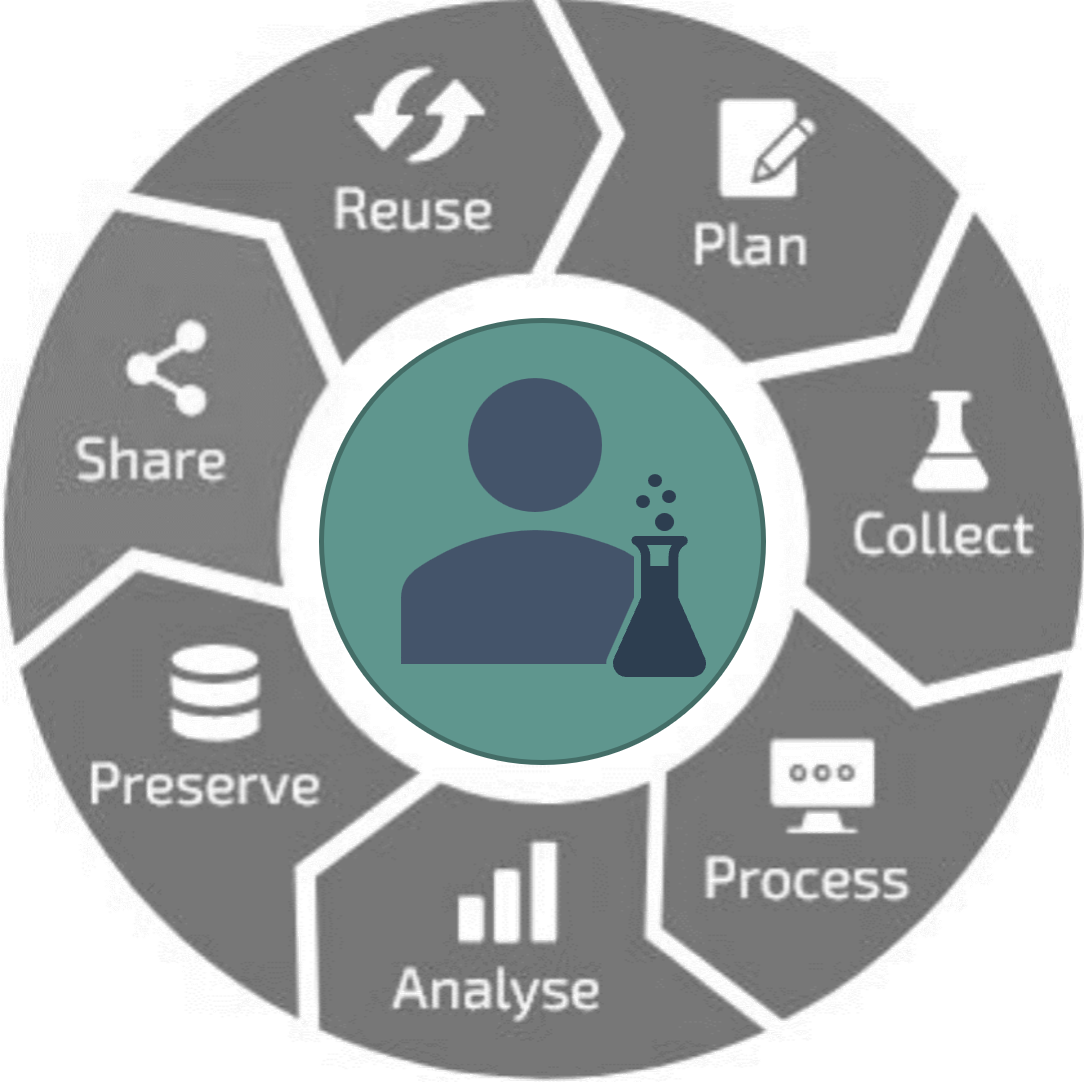

The ARC is designed to fully support Research Data Management (RDM) practices and to facilitate participation in the evolving RDM ecosystem. RDM involves managing research data at every stage of its lifecycle, including:

- Collecting

- Organizing

- Documenting

- Processing

- Analyzing

- Storing

- Sharing

By integrating the ARC into their workflows, researchers can manage data effectively across these stages, keeping their research well-organized, accessible, and reusable.

# Goals of Research Data Management (RDM)

- Enhancing research effectiveness and efficiency: Well-organized data accelerates the research process by making data easier to find, analyze, and reuse.

- Improving data security: Proper management helps to avoid data or metadata loss, ensuring that research outcomes are preserved over time.

- Preventing errors: Clear documentation and structuring minimize the risk of mistakes during data handling and analysis.

- Increasing research impact: High-quality data management ensures that datasets are shareable and reusable, boosting the visibility and impact of research.

- Ensuring reproducibility: Robust RDM practices make it easier to reproduce studies, which is critical for scientific credibility.

- Promoting data reusability: Properly managed data can be reused by other researchers, increasing its value beyond the original project.

# Open Data: The Path to Open Science

Publicly funded research is often expected to provide a return to society, and research data is frequently one of the most valuable outputs of these projects. Data reusability plays a crucial role in maximizing this return.

Sharing both research data and experimental protocols is essential for ensuring reproducibility. Journals and peer review processes should emphasize the requirement for sharing this data, reinforcing transparency in scientific endeavors. Open data not only supports reproducibility but also accelerates scientific discovery by making information accessible to a wider community.

# Data Publication: Solving the Data Reuse Problem

Traditionally, researchers are evaluated based on the knowledge they publish in scientific journals. However, the data behind these publications also hold substantial value.

- Reusability: Data can be reused to generate further insights, validate findings, or support new research directions.

- Re-examination: As new techniques or theories emerge, existing data may need to be revisited.

- Evolution of conclusions: Scientific conclusions can change or evolve when additional data is considered.

Unfortunately, in traditional publications, data often remain buried in supplemental materials or are only summarized in figures, which makes them difficult to reuse. This approach does not scale to the growing volumes of research data, which need to be findable, accessible, and usable in more structured ways.

# Data as a Primary Product in Science

Data itself is a primary product of scientific research, holding significant intrinsic value. It can be:

- Reused to drive new discoveries and insights

- Re-examined when novel techniques or theories arise

- Reintegrated with new datasets to refine or revise conclusions

To realize this value, data must be treated as atomic facts: core pieces of information that can stand alone or be combined with other datasets to yield new knowledge. When managed correctly, data transcends its role in a single project and becomes a long-term asset for the broader scientific community.

# FAIR Digital Objects (FDOs) and Data Publication

The concept of FAIR Digital Objects (FDOs) is becoming central to data publication. Classical publications are beginning to reference FDOs as standalone data publications, enabling data to be shared independently of traditional articles.

The FAIR Data Principles (Findable, Accessible, Interoperable, Reusable) outline how data packages should be structured to ensure they are useful for both humans and machines. Adhering to these principles is increasingly required by funding agencies and is essential for making research data valuable and sustainable.

- Findable: Data should be easy to locate, indexed, and searchable.

- Accessible: Data should be retrievable through open, standardized protocols.

- Interoperable: Data should be compatible with other datasets, enabling integration.

- Reusable: Data should be well-documented, structured, and licensed for reuse.

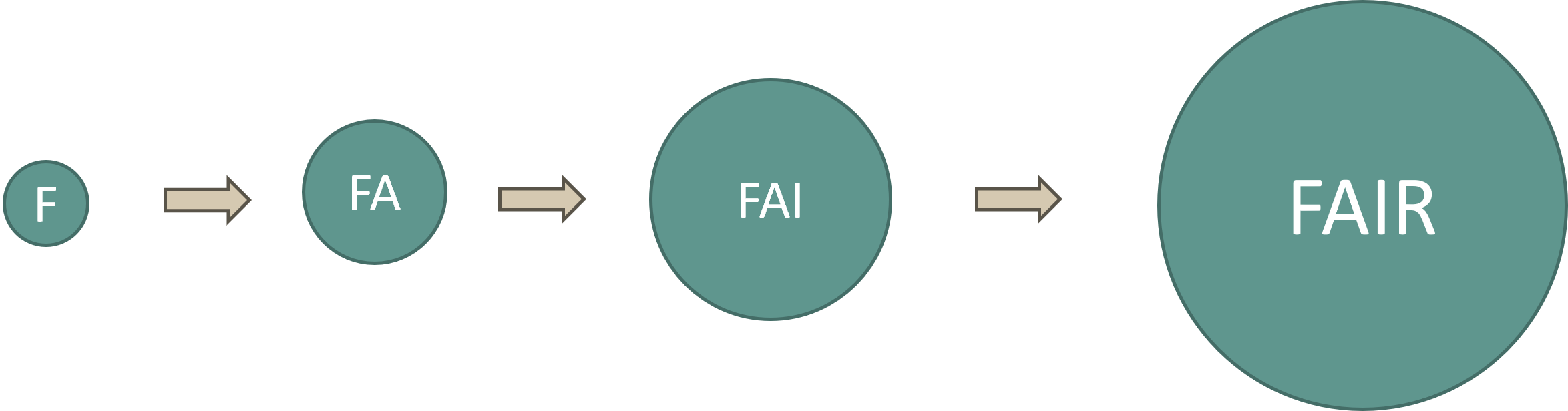
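The FAIR principles are meant to serve machines as well as humans. As one illustration of what a machine-readable package description can look like, the sketch below builds a minimal metadata document following the RO-Crate 1.1 specification, a JSON-LD format commonly used to package research data. Choosing RO-Crate as the target format is an assumption made for this example, and the function name `build_ro_crate_metadata`, the dataset name, description, date, and file path are hypothetical placeholders.

```python
import json


def build_ro_crate_metadata(name: str, description: str, license_url: str,
                            date_published: str, files: list[str]) -> dict:
    """Build a minimal RO-Crate 1.1 metadata document (hypothetical helper)."""
    # Metadata descriptor: declares which specification this file conforms to,
    # so that software can interpret it without guessing (Interoperable).
    descriptor = {
        "@id": "ro-crate-metadata.json",
        "@type": "CreativeWork",
        "conformsTo": {"@id": "https://w3id.org/ro/crate/1.1"},
        "about": {"@id": "./"},
    }
    # Root dataset: name and description aid discovery (Findable);
    # an explicit license states the terms of reuse (Reusable).
    root = {
        "@id": "./",
        "@type": "Dataset",
        "name": name,
        "description": description,
        "datePublished": date_published,
        "license": {"@id": license_url},
        "hasPart": [{"@id": path} for path in files],
    }
    # Each data file becomes its own entity that can be referenced and described.
    file_entities = [{"@id": path, "@type": "File"} for path in files]
    return {
        "@context": "https://w3id.org/ro/crate/1.1/context",
        "@graph": [descriptor, root, *file_entities],
    }


if __name__ == "__main__":
    crate = build_ro_crate_metadata(
        name="Leaf growth under drought stress",        # hypothetical
        description="Hypothetical example dataset",      # hypothetical
        license_url="https://creativecommons.org/licenses/by/4.0/",
        date_published="2024-01-15",                     # hypothetical
        files=["assays/growth/dataset/measurements.csv"],  # hypothetical path
    )
    print(json.dumps(crate, indent=2))
```

Each FAIR aspect maps loosely onto a field here: `name` and `description` support findability, the `conformsTo` declaration supports interoperability, and `license` supports reuse. Accessibility depends on where the package is hosted and how it is retrieved, which a metadata file alone cannot guarantee.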

Producing FDOs that meet FAIR standards is always worthwhile. FAIRness is a continuum, and researchers can incrementally improve their data packages towards greater compliance. The ARC embraces this philosophy by supporting continuous improvement toward FAIRness.

# FAIRness as an Ongoing Process

FAIRness can be progressively enhanced over time. It represents a balance between the agile development of research data and the need for scholarly stability. The ARC supports this philosophy by providing researchers with the tools and structure to improve their data’s FAIRness gradually, ensuring maximum value for both the individual researcher and the broader scientific community.

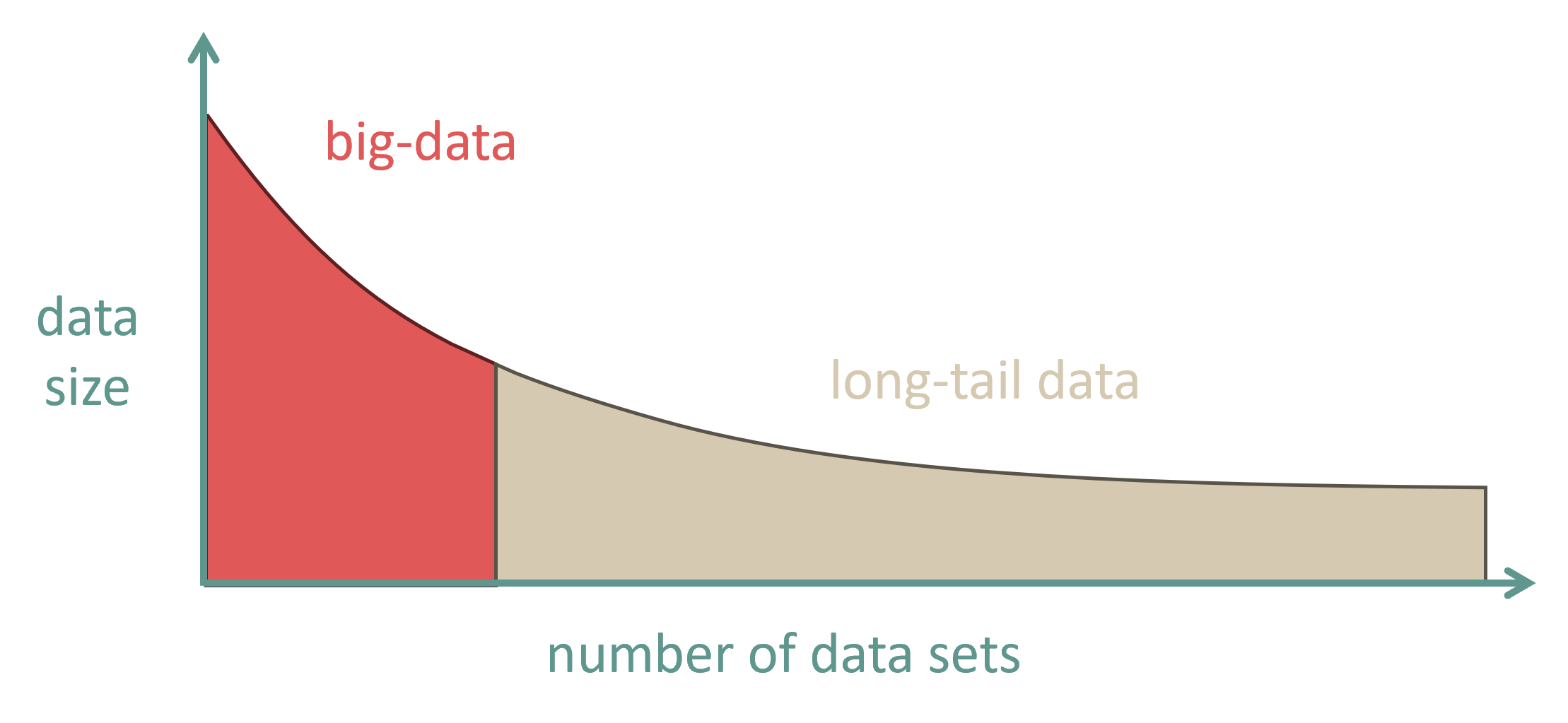
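To make the idea of a continuum concrete, the sketch below scores successive revisions of a metadata record against a short checklist. The checklist, its field names (`identifier`, `url`, `ontology_terms`, `license`, `protocol`), and the scoring are hypothetical simplifications chosen for illustration; they are not an official FAIR metric and not part of the ARC tooling.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Check:
    principle: str                   # "F", "A", "I", or "R"
    description: str
    passes: Callable[[dict], bool]   # returns True if the record satisfies the check


# Hypothetical, deliberately simplified checks; not an official FAIR metric.
CHECKS = [
    Check("F", "has a persistent identifier", lambda m: bool(m.get("identifier"))),
    Check("F", "has a descriptive title", lambda m: bool(m.get("name"))),
    Check("A", "has an access URL", lambda m: bool(m.get("url"))),
    Check("I", "uses ontology terms for key variables", lambda m: bool(m.get("ontology_terms"))),
    Check("R", "declares a license", lambda m: bool(m.get("license"))),
    Check("R", "documents the protocol", lambda m: bool(m.get("protocol"))),
]


def fairness_report(metadata: dict) -> tuple[int, list[str]]:
    """Return the number of checks passed and a list of the missing items."""
    missing = [f"{c.principle}: {c.description}" for c in CHECKS if not c.passes(metadata)]
    return len(CHECKS) - len(missing), missing


if __name__ == "__main__":
    # First revision: only a title and a protocol description.
    v1 = {"name": "Leaf growth under drought stress", "protocol": "protocols/growth.md"}
    # Later revision: the same record plus a license and an identifier.
    v2 = {**v1, "license": "CC-BY-4.0", "identifier": "hypothetical-id-0001"}
    for label, record in (("v1", v1), ("v2", v2)):
        passed, missing = fairness_report(record)
        print(f"{label}: {passed}/{len(CHECKS)} checks passed; missing: {missing}")
```

Running `fairness_report` on each revision shows the count rising as items are added, mirroring the gradual improvement described above: a record does not need to pass every check before it is worth sharing.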

# “Small Data Done Right” is Big Data

When data are well-managed and shared effectively, even small datasets can contribute to large-scale scientific efforts. Combining data across studies requires data-sharing practices that extend beyond the lifespan of individual projects. This reinforces the idea that research done right is teamwork by default, and well-structured, shareable data is a cornerstone of collaborative science.

By embracing these principles, the ARC fosters a research environment that strengthens RDM practices, promotes open science, and supports the continuous improvement of data FAIRness, driving the scientific community forward.