ARC Data Hub: Bring your ARCs to the cloud

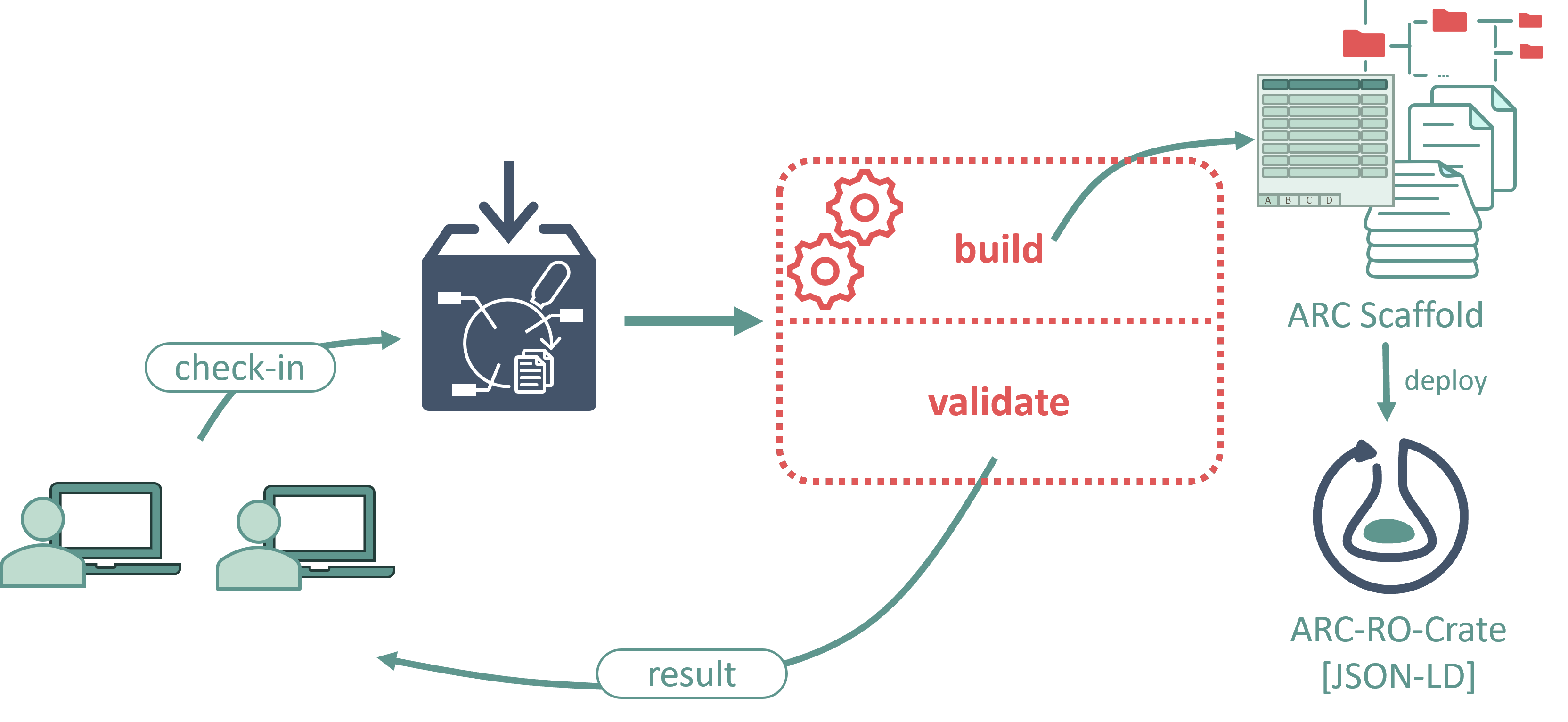

The ARC Data Hub concept applies the software development practices of Continuous Integration (CI) and Continuous Deployment (CD) to the research data management (RDM) framework provided by ARCs (Annotated Research Contexts), making ARCs first-class citizens in the cloud. Like software, ARCs can then be continuously validated, built, and deployed. Because the tasks described below only require CI/CD, many collaborative cloud platforms, such as GitLab, GitHub, or Bitbucket, can be used to build an ARC Data Hub.

# Continuous Deployment

CD can be used to continuously deploy ARC artifacts, such as metadata export formats or computational results, to other environments.

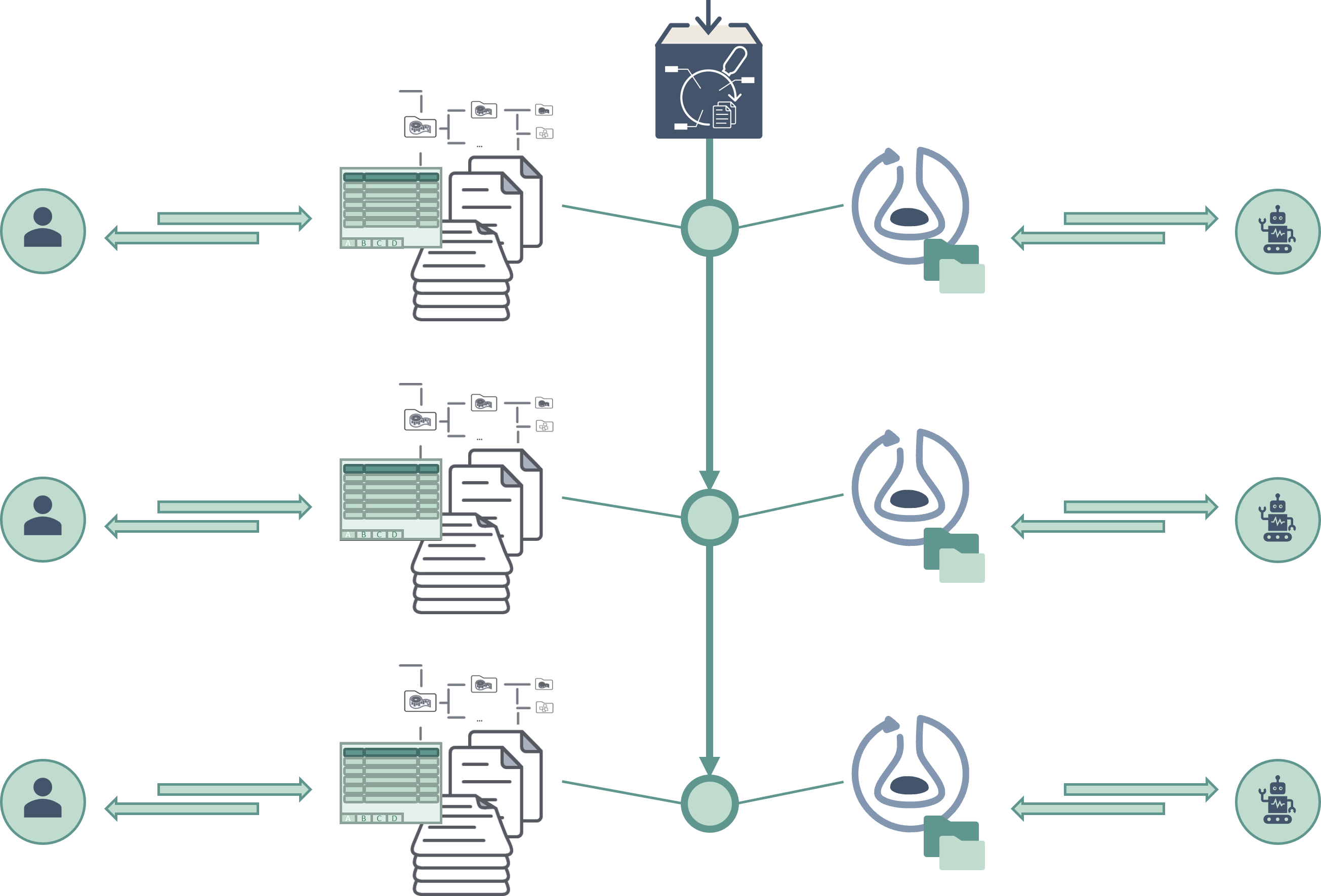

ARC Data Hubs use CD to build the ARC-RO-Crate metadata for each commit and deploy it to a central package registry. This keeps both representations of the ARC in sync and accessible: the ARC repository itself offers a user-centric view, while the ARC-RO-Crate offers a machine-readable view.
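To make the CD step more concrete, the following minimal sketch builds an RO-Crate 1.1 metadata document for a single commit and derives a per-commit package name. It is illustrative only: the real ARC-RO-Crate profile adds ISA-specific entities (studies, assays, people) that are omitted here, and the `package_name` scheme and the use of the commit SHA as `version` are hypothetical, not the PLANTdataHUB's actual conventions.

```python
import json
from datetime import datetime, timezone


def build_ro_crate_metadata(arc_name: str, commit_sha: str) -> dict:
    """Return a minimal RO-Crate 1.1 metadata document for one ARC commit.

    Only the two entities every RO-Crate needs are included; an actual
    ARC-RO-Crate export contains many more.
    """
    return {
        "@context": "https://w3id.org/ro/crate/1.1/context",
        "@graph": [
            {
                # Metadata descriptor: links this file to the root dataset.
                "@id": "ro-crate-metadata.json",
                "@type": "CreativeWork",
                "conformsTo": {"@id": "https://w3id.org/ro/crate/1.1"},
                "about": {"@id": "./"},
            },
            {
                # Root data entity: the ARC as of this commit.
                "@id": "./",
                "@type": "Dataset",
                "name": arc_name,
                "version": commit_sha,  # hypothetical: commit SHA as version
                "datePublished": datetime.now(timezone.utc).isoformat(),
            },
        ],
    }


def package_name(arc_name: str, commit_sha: str) -> str:
    # Hypothetical naming scheme: one registry package version per commit.
    return f"{arc_name}-ro-crate@{commit_sha[:8]}"


if __name__ == "__main__":
    sha = "3f9c2a1b7d4e5f60"
    print(package_name("my-arc", sha))
    print(json.dumps(build_ro_crate_metadata("my-arc", sha), indent=2))
```

In a CI/CD pipeline, a job like this would run on every push, and its JSON output would be uploaded to the platform's package registry under the generated name.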

# Continuous Integration

Incremental changes to ARCs can trigger integrations. ARC Data Hubs can run a user-selected set of validation packages on each commit or pull request to verify that the ARC still satisfies the chosen validation targets after the change.
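A validation package can be pictured as a named list of checks run against the ARC's files. The sketch below assumes a package that only checks the basic ARC layout (an `isa.investigation.xlsx` file plus `studies/` and `assays/` folders); the check helpers and the `BASIC_ARC_STRUCTURE` package are hypothetical and far simpler than real validation packages.

```python
import tempfile
from pathlib import Path
from typing import Callable

# A check inspects an ARC root directory and returns (passed, message).
Check = Callable[[Path], "tuple[bool, str]"]


def has_file(relative: str) -> Check:
    def check(root: Path) -> tuple[bool, str]:
        ok = (root / relative).is_file()
        return ok, f"{relative} {'found' if ok else 'missing'}"
    return check


def has_dir(relative: str) -> Check:
    def check(root: Path) -> tuple[bool, str]:
        ok = (root / relative).is_dir()
        return ok, f"{relative}/ {'found' if ok else 'missing'}"
    return check


# Hypothetical validation package covering only the basic ARC layout.
BASIC_ARC_STRUCTURE: list[Check] = [
    has_file("isa.investigation.xlsx"),
    has_dir("studies"),
    has_dir("assays"),
]


def run_validation_package(root: Path, checks: list[Check]) -> tuple[bool, list[str]]:
    """Run every check; the package passes only if all checks pass."""
    results = [check(root) for check in checks]
    return all(ok for ok, _ in results), [msg for _, msg in results]


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        root = Path(tmp)
        (root / "isa.investigation.xlsx").touch()
        (root / "studies").mkdir()
        passed, messages = run_validation_package(root, BASIC_ARC_STRUCTURE)
        print(passed, messages)  # assays/ is missing, so passed is False
```

A CI job would call `run_validation_package` once per selected package on the checked-out commit and fail the pipeline if any package does not pass.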

Furthermore, the validation package output can be used to continuously inform the user about the current state of the ARC, for example by creating badges on the ARC page, much like the widely known build and test badges in software development.
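One way to turn a validation result into a badge is sketched below. It assumes the hub writes a small JSON file in the shields.io endpoint-badge schema (`schemaVersion`, `label`, `message`, `color`) for each validation package. This is an illustrative choice; how a given ARC Data Hub actually renders its badges may differ, and the file handling shown is hypothetical.

```python
import json


def badge_for(package_name: str, passed: bool) -> dict:
    # Map one validation package result to a badge description.
    # Keys follow the shields.io endpoint-badge JSON schema.
    return {
        "schemaVersion": 1,
        "label": package_name,
        "message": "passing" if passed else "failing",
        "color": "brightgreen" if passed else "red",
    }


def write_badge(path: str, package_name: str, passed: bool) -> None:
    # Hypothetical: the hub would serve this file at a public URL.
    with open(path, "w", encoding="utf-8") as fh:
        json.dump(badge_for(package_name, passed), fh)
```

If the JSON file is reachable at a public URL, shields.io can render it via `https://img.shields.io/endpoint?url=<url-encoded file URL>`, and the resulting image can be embedded on the ARC page like a build badge.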

# Continuous Quality Control

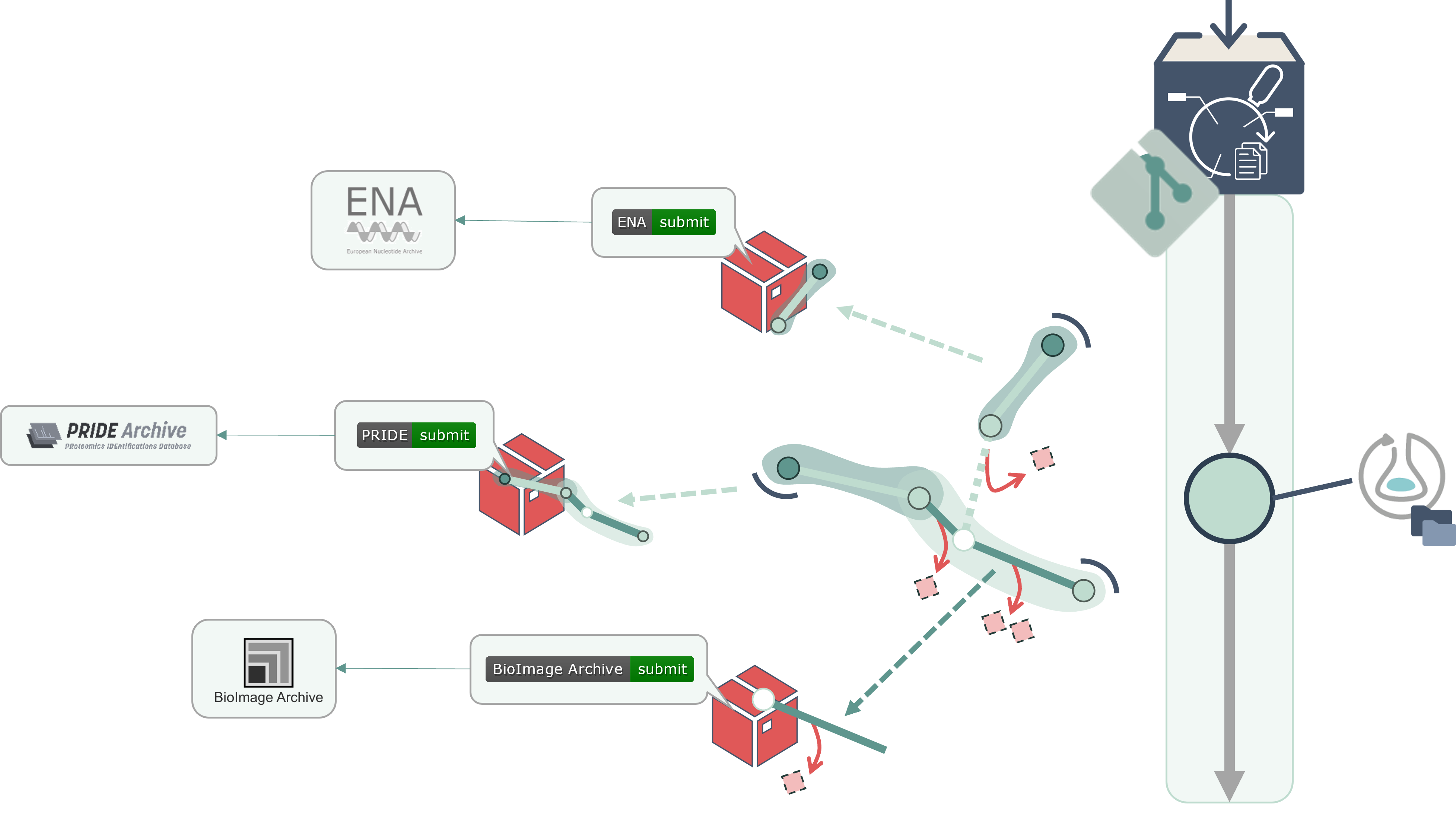

Continuous Quality Control (CQC) is a combination of CI and CD that integrates external services depending on the result of ARC validation. Successful validation can trigger downstream applications, either automatically or manually via CQC Hooks.
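The gating logic behind CQC Hooks can be sketched as follows: each hook names the validation package it depends on and whether it runs automatically or only after a user triggers it. The `CQCHook` class, its fields, and `dispatch_hooks` are hypothetical and only model the behavior described above, not the actual hook implementation.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class CQCHook:
    """Hypothetical model of a CQC hook: a downstream action gated on validation."""
    name: str
    required_package: str      # validation package that must pass
    automatic: bool            # True: run on success; False: wait for the user
    action: Callable[[], str]


def dispatch_hooks(hooks: list[CQCHook], results: dict[str, bool],
                   approved: set[str]) -> list[str]:
    """Run each hook whose validation package passed.

    Manual hooks additionally require the user's approval (their name in `approved`).
    """
    outputs = []
    for hook in hooks:
        if not results.get(hook.required_package, False):
            continue  # failed or missing validation blocks the downstream step
        if hook.automatic or hook.name in approved:
            outputs.append(hook.action())
    return outputs
```

For example, a notification hook could run automatically after every passing commit, while a publication hook stays manual so that a user decides when to publish.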

The PLANTdataHUB serves as a reference implementation of an ARC Data Hub and is hosted centrally by NFDI DataPLANT for the plant research community. Beyond its core functionality as an ARC Data Hub, it uses CQC in its data publication pipeline to ensure that all metadata required for publication is complete and accurate.
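A completeness check of the kind a publication validation package performs might look like the sketch below. The required field names are hypothetical placeholders, and the investigation metadata is assumed to be already parsed into a plain dictionary; the sketch only checks completeness, since accuracy generally needs domain-specific rules.

```python
# Hypothetical minimum for publication; real requirements are defined by the
# publication validation package, not by these lists.
REQUIRED_FIELDS = ("title", "description")
REQUIRED_CONTACT_FIELDS = ("first_name", "last_name", "email")


def _is_blank(value: object) -> bool:
    return value is None or not str(value).strip()


def missing_publication_metadata(investigation: dict) -> list[str]:
    """Return the paths of required metadata fields that are absent or blank."""
    missing = [f for f in REQUIRED_FIELDS if _is_blank(investigation.get(f))]
    contacts = investigation.get("contacts") or []
    if not contacts:
        missing.append("contacts")
    for i, contact in enumerate(contacts):
        missing.extend(
            f"contacts[{i}].{field}"
            for field in REQUIRED_CONTACT_FIELDS
            if _is_blank(contact.get(field))
        )
    return missing
```

Returning field paths rather than a single boolean lets the pipeline tell the user exactly which metadata to add before publication can proceed.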

CQC also supports submissions to other endpoint repositories, provided that a corresponding validation package and downstream submission application exist. Because each submission must first pass the target repository's validation package, ARCs meet that repository's requirements before they are submitted, which simplifies data sharing across platforms.
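The two preconditions for a repository submission can be sketched as a lookup followed by a validation gate. The `Endpoint` type, `submit_arc`, and the repository names used with them are hypothetical; the sketch only illustrates that a submission proceeds when both a validation package and a submitter exist for the target and the package has passed.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Endpoint:
    """Hypothetical pairing of a target repository's validation package and submitter."""
    validation_package: str
    submit: Callable[[str], str]


def submit_arc(arc_id: str, target: str, endpoints: dict[str, Endpoint],
               results: dict[str, bool]) -> str:
    endpoint = endpoints.get(target)
    if endpoint is None:
        # No validation package / submission application exists for this target.
        raise LookupError(f"no submission pipeline for {target!r}")
    if not results.get(endpoint.validation_package, False):
        raise ValueError(f"{arc_id} did not pass {endpoint.validation_package!r}")
    return endpoint.submit(arc_id)
```

Adding support for a new repository then amounts to registering one more `Endpoint` entry, without changing the gating logic.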